Every so often, a big study comes out about the potential benefits of drinking things like wine, champagne, and coffee. When the results paint a picture of these drinks being good for our health, it confirms our natural bias toward them, and we celebrate!

The problem is, most of the time we are taking home the wrong message.

In the latest study, Loftfield et al. (2018) studied over half a million people and examined the relationship between consumption of all kinds of coffee and mortality. Their results showed that there was indeed a link between one of the world’s favorite drinks and reduced mortality. However, there is more to this study than appears in the headlines.

Like every study, this one has important limitations. First, of all the people invited to take part, only 5.5% responded, which creates “evidence of a ‘healthy volunteer’ selection bias.” It’s a bit like when Fox News or MSNBC puts out a poll: they will mostly hear from conservatives or liberals, respectively, or from people with extreme views on the topic. The sample isn’t really representative of the population, so generalizing this relationship to everyone is a stretch. Additionally, the claims about lowering mortality refer to “all-cause mortality,” which means death from any cause. That is a little odd: you wouldn’t expect coffee to protect you in a car accident, and yet the associations between coffee consumption and specific causes of death such as stroke, colorectal cancer, and female breast cancer were not statistically significant.
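
A toy simulation can make the healthy-volunteer problem concrete. This is a sketch under made-up assumptions, not a model of the actual study: the 50/50 health split, the death risks, and the response probabilities are invented numbers, chosen only so that the overall response rate lands near 5.5%. Coffee does not appear at all; the point is just how a self-selected sample can look healthier than the population it came from.

```python
import random


def simulate_response_bias(n=200_000, seed=1):
    """Toy model of 'healthy volunteer' bias using invented numbers.

    Returns (population death rate, volunteer death rate, response rate).
    """
    rng = random.Random(seed)
    deaths = 0
    volunteers = 0
    volunteer_deaths = 0
    for _ in range(n):
        healthy = rng.random() < 0.5  # assumed: half the population is healthy
        died = rng.random() < (0.05 if healthy else 0.15)  # assumed death risk
        # Assumed: healthy people are more likely to answer the invitation.
        volunteered = rng.random() < (0.08 if healthy else 0.03)
        deaths += died
        if volunteered:
            volunteers += 1
            volunteer_deaths += died
    return deaths / n, volunteer_deaths / volunteers, volunteers / n


if __name__ == "__main__":
    population, sample, response = simulate_response_bias()
    print(f"response rate:          {response:.1%}")
    print(f"death rate, everyone:   {population:.1%}")
    print(f"death rate, volunteers: {sample:.1%}")
```

With these invented numbers, about 5.5% of people respond, and the volunteers die noticeably less often than the population as a whole, so any rate measured in the volunteers alone would paint the population as healthier than it is.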

While these are some pretty serious limitations in my book, I still consider this study a solid effort by researchers to understand any link between coffee and mortality (though a preregistration would have been nice). But the bottom line is that this type of research is very hard to conduct, and as consumers of research, we need to keep its limitations in mind when we draw inferences from it. The authors did a good job of being transparent about this, and they end their paper by stating, “Our results are based on observational data and should be interpreted with caution.”

What this boils down to is the old Latin phrase “post hoc ergo propter hoc,” which means “after this, therefore because of this” (its close cousin, “cum hoc ergo propter hoc,” means “with this, therefore because of this”). Simply put, correlation does not equal causation.

Participants in this sample were not randomly assigned to drink coffee, and there was no control group. Therefore, we cannot make statements about coffee causing any effect on health. Let’s walk through an example to illustrate this point. Imagine that coffee has no effect on mortality, but that people who drink coffee (or more coffee) are more likely to wake up early to exercise, or to drink it to stay awake at an office job (an occupation with few physical or chemical hazards that often comes with health benefits). Now say that it was actually the exercise and health benefits that warded off mortality. If this were true, a correlational design would lump the true cause of reduced mortality (exercise and health benefits) together with coffee consumption. All we would see is that coffee consumption is linked to reduced mortality, even though coffee had no effect on mortality at all. If we then implied causation, we would be falsely claiming that coffee reduced mortality when in fact it was exercise and health benefits. We would be wrong.
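
To make this thought experiment concrete, here is a small, hypothetical simulation of the world just described. Every number in it (how many people exercise, how much more often exercisers drink coffee, and everyone’s death risk) is an invented assumption, and coffee is given zero effect on mortality on purpose. Comparing all coffee drinkers with all non-drinkers still makes coffee look protective; comparing them within each exercise group, which is roughly what “controlling for” a variable means, makes the gap disappear.

```python
import random


def simulate_confounding(n=200_000, seed=2):
    """Hypothetical world where exercise drives both coffee drinking and survival.

    Coffee has NO effect on death here, by construction.
    Returns {(exercises, drinks_coffee): [deaths, people]}.
    """
    rng = random.Random(seed)
    counts = {(e, c): [0, 0] for e in (False, True) for c in (False, True)}
    for _ in range(n):
        exercises = rng.random() < 0.4  # assumed share of early exercisers
        drinks_coffee = rng.random() < (0.8 if exercises else 0.4)  # exercisers drink more coffee
        died = rng.random() < (0.05 if exercises else 0.12)  # risk depends on exercise only
        cell = counts[(exercises, drinks_coffee)]
        cell[0] += died
        cell[1] += 1
    return counts


def death_rate(counts, coffee, exercises=None):
    """Death rate among drinkers (or non-drinkers), optionally within one exercise group."""
    deaths = people = 0
    for (e, c), (d, p) in counts.items():
        if c == coffee and (exercises is None or e == exercises):
            deaths += d
            people += p
    return deaths / people


if __name__ == "__main__":
    counts = simulate_confounding()
    # Crude comparison: coffee looks protective.
    print("everyone:", death_rate(counts, True), "vs", death_rate(counts, False))
    # Within each exercise group, the apparent coffee effect vanishes.
    for ex in (True, False):
        print(f"exercises={ex}:", death_rate(counts, True, ex), "vs", death_rate(counts, False, ex))
```

The within-group comparison only works here because the simulation records who exercises; a real study can only adjust for the variables it actually measured.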

This is why we cannot make causal claims from correlational designs. Researchers do their best to control for things that seem like obvious confounds (in this study, they controlled for smoking habits, self-reported health, and some other variables), but you cannot control for everything that might have an effect on, or interact with, something else. It’s impossible. Therefore, in science, causal inference is reserved for the most rigorous experimental designs. And sometimes not even then.
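
Random assignment is what gets around the confounders nobody thought to measure. The sketch below is a hypothetical simulation with invented numbers: a hidden trait, here called `healthy_habits`, lowers death risk and makes people more likely to choose coffee, while coffee itself does nothing. When people pick their own coffee habits, drinkers appear to die less often; when a coin flip decides who drinks coffee, the hidden trait ends up spread evenly across both groups and the gap shrinks toward zero, without anyone having to measure it.

```python
import random


def coffee_gap(randomize, n=200_000, seed=3):
    """Death rate of non-drinkers minus death rate of drinkers in a toy population.

    A hidden trait (healthy_habits) lowers death risk; coffee has no effect by construction.
    """
    rng = random.Random(seed)
    deaths = {True: 0, False: 0}
    people = {True: 0, False: 0}
    for _ in range(n):
        healthy_habits = rng.random() < 0.4  # never measured by the "researchers"
        if randomize:
            coffee = rng.random() < 0.5  # coin flip, independent of healthy_habits
        else:
            coffee = rng.random() < (0.8 if healthy_habits else 0.4)  # people choose for themselves
        died = rng.random() < (0.05 if healthy_habits else 0.12)
        deaths[coffee] += died
        people[coffee] += 1
    return deaths[False] / people[False] - deaths[True] / people[True]


if __name__ == "__main__":
    print(f"observational gap: {coffee_gap(randomize=False):+.3f}")
    print(f"randomized gap:    {coffee_gap(randomize=True):+.3f}")
```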

If you learned about this study before reading this post, you likely heard about it from the media. The media sometimes does a good job of preserving a study’s message, as is evident in these tweets from People magazine and ABC News. (Note: I’m willing to forgive them for not mentioning the sample’s lack of representativeness, because we can only ask so much.)

They make no causal claim, and the outcome variable (risk of dying) is more or less preserved (although it’s unclear what dying “early” means). Good job!

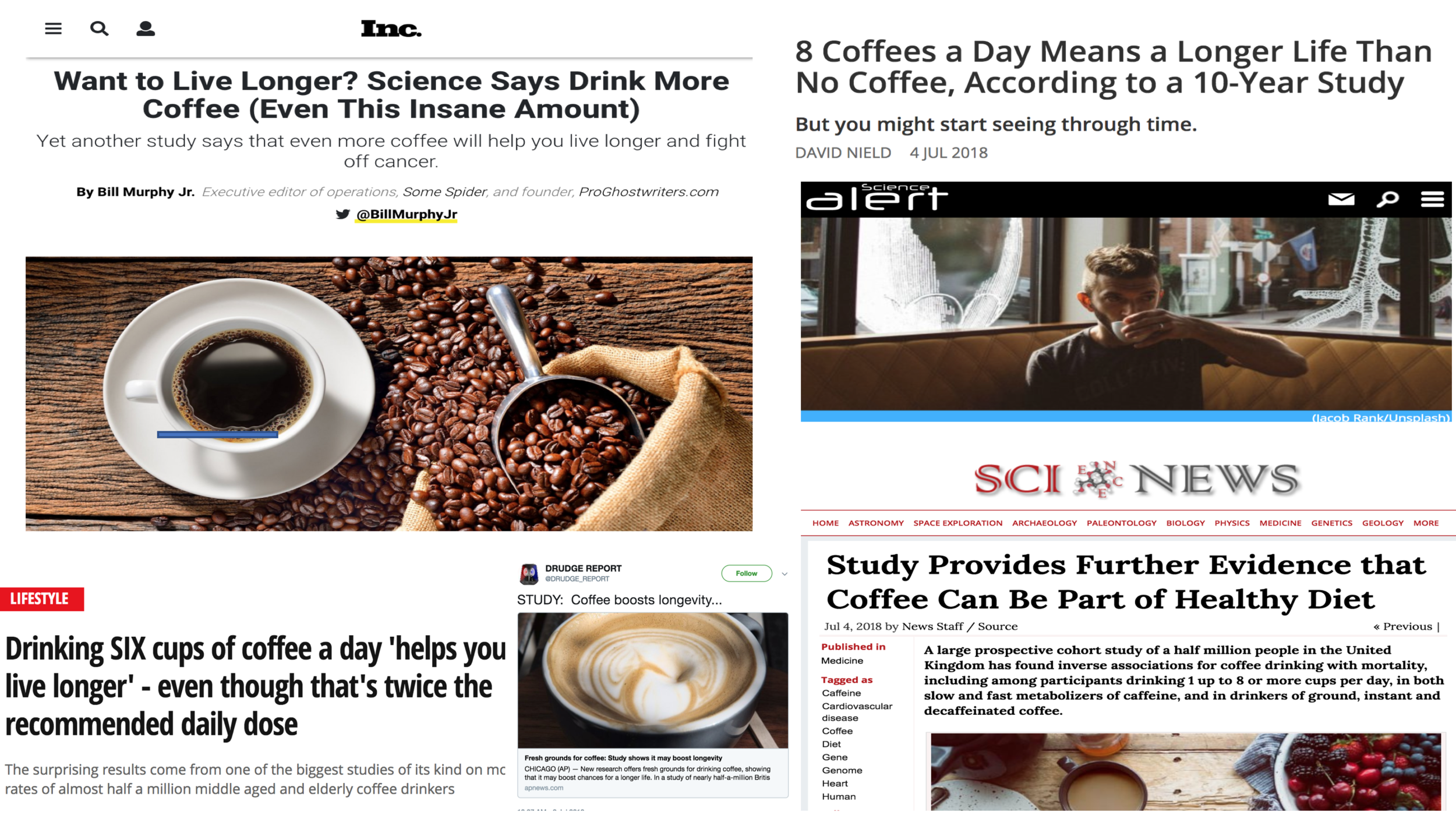

However, for every decent headline that refuses to stretch correlation into causation, there are others that dare to. And they are everywhere.

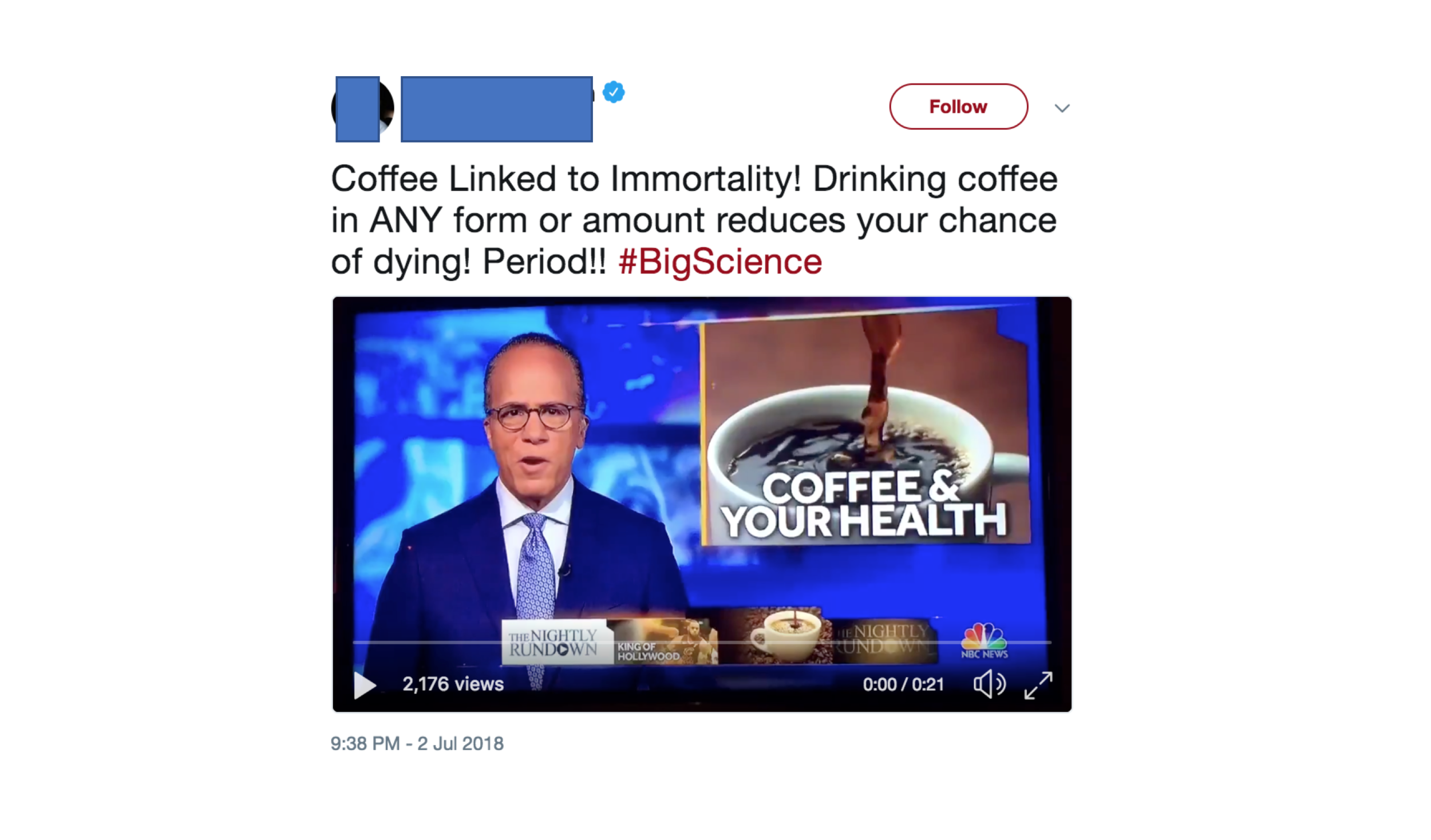

These headlines fail to convey the true nature of the study to the reader, and some even make false claims about its conclusions! These distorted accounts sidestep the researchers’ careful effort to weigh their conclusions against the design of the study (“Our results are based on observational data and should be interpreted with caution”). Together, the maelstrom of causal headlines and post hoc fallacies surrounding this study confuses readers and gives them room to make sweeping statements that are blatantly wrong. In fact, I ran across one tweet so bad that it set me off enough to push back my day by an hour and a half to write this article.

Let’s set the record straight.

- Coffee was only linked to a lower risk of mortality. Nothing will make you live forever.

- We don’t know that coffee will reduce your risk, because the data are correlational and have serious limitations due to a very poor response rate.

- This is not the end of the debate. We still need studies with random assignment, higher response rates, and so on to understand the connection more clearly.

Conclusions: When reading headlines about the latest studies, be critical, because they are quite often wrong. To be a better consumer of research, ask yourself these three questions whenever a flashy new study pops up: 1) Was it a correlational or an experimental study? 2) How many participants were there? 3) Were the participants representative of the population? Thinking about these factors when weighing the conclusions and inferences drawn by the study and by the media (which can be completely different) will make you a better consumer of knowledge.