Earlier today, I read an article published on Washington’s Blog titled “Fear of Terror Makes People Stupid.” The central claim of the post was that the government purposefully induces fear of a terrorist attack in order to get Americans to relinquish more of their civil liberties (see: Patriot Act), but this fear is silly (so the post claims) because we are so much more likely to die from things like heart disease, car accidents, and (in fact) hot weather than from a terrorist attack.

Now, I’m not here to defend or deny what the government does or does not do, but I do want to talk about this “irrational fear” and how it represents a misunderstanding of probabilities.

You may have seen previous posts on this site about Bayes’ Theorem, but if you haven’t, here’s a quick and dirty explanation. This guy Thomas Bayes (1701-1761) worked out a rule for how our estimate of the probability of an event should depend on our prior beliefs about how likely that event is. In other words, if I wanted to judge the probability that my runny nose means I have a cold, I would take into consideration how likely it is that someone with a cold has a runny nose, my prior belief about how likely it is to get a cold (before knowing the symptoms), and how likely it is to get a runny nose (whatever the cause).

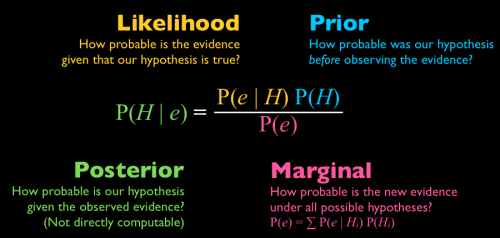

Bayes’ Theorem is written here using H (for hypothesis) and E (for evidence). In this little scenario, the hypothesis is ‘having a cold’ and the evidence is ‘having a runny nose’. Bayes’ theorem then looks like this:

$$P(H|E) = \frac{P(E|H)\,P(H)}{P(E)}$$

In plain English, you would read it like this: “The probability that the hypothesis is true, given the evidence, is equal to the likelihood of the evidence occurring when the hypothesis is true, times the probability of the hypothesis being true before seeing any evidence, divided by the probability of the evidence occurring under all possible hypotheses.”

Or in the cold scenario: “The probability that I have a cold (given that I have a runny nose) is equal to the probability of having a runny nose when I have a cold, times the probability of having a cold (regardless of whether I have a runny nose or not), divided by the probability of having a runny nose (regardless of whether it’s caused by a cold or something else).”

Summary:

P(H|E) = the probability that I have a cold, given that I have a runny nose

P(E|H) = the probability of having a runny nose when I have a cold

P(H) = the probability of having a cold, without knowing what my symptoms are

P(E) = the probability of having a runny nose, whatever the cause may be
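The summary above translates almost line for line into code. Here is a minimal Python sketch; the function names `posterior` and `marginal` are my own, and `marginal` assumes there are only two possible hypotheses (H and not-H), which is enough for the examples in this post. The numbers at the bottom are made up purely to exercise the functions.

```python
def marginal(p_e_given_h, p_e_given_not_h, p_h):
    """P(E) when there are only two hypotheses, H and not-H.

    Adds up the chance of seeing the evidence under each hypothesis,
    weighted by how likely that hypothesis is (law of total probability).
    """
    return p_e_given_h * p_h + p_e_given_not_h * (1 - p_h)


def posterior(p_e_given_h, p_h, p_e):
    """Bayes' theorem: P(H|E) = P(E|H) * P(H) / P(E)."""
    if p_e <= 0:
        raise ValueError("P(E) must be positive")
    return p_e_given_h * p_h / p_e


# Hypothetical numbers, not real cold statistics:
# P(E|H) = 0.9, P(E|not H) = 0.2, P(H) = 0.1
p_e = marginal(0.9, 0.2, 0.1)
print(round(posterior(0.9, 0.1, p_e), 4))
```

With these made-up inputs, P(E) comes out to 0.27 and the posterior to one third: even though the evidence is 4.5 times more likely under H, the low prior keeps P(H|E) well below 50%.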

Bayes’ rule is also often used as a model of everyday judgment: the idea is that people subconsciously estimate these different probabilities when they judge how likely an event is to occur or a hypothesis is to be true. In the case of colds and runny noses, we’re pretty good at this and our estimated probabilities tend to match reality. But a lot of research has shown that there are situations in which our estimates are wildly off, especially when those events are rare but come to mind easily. The problem is that people tend to misjudge the prior probability of a hypothesis, relying on salient memories rather than the true rate of occurrence, or “base rate”.

Here’s an example of how that happens:

We’re all familiar (presumably) with airport security. When you put your carry-on bags through that x-ray machine, the security staff are looking for bombs (among other things). Now let’s acknowledge that this x-ray test is not perfect, so when there IS a bomb, it gets detected 98% of the time, and when there ISN’T a bomb, the staff will think they see one about 1% of the time. Let’s also acknowledge that only 1 person in a million actually DOES have a bomb in their bag (that estimate is probably high, but let’s just run with it).

So you’re at the airport and a bag down the line in front of you comes up positive with this test (the staff believe they see a bomb). What’s the probability that the bag ACTUALLY contains a bomb? Intuitively, you probably think the chance is pretty high. You might think to yourself, “Well the test catches almost all of the bombs (98 out of 100), and it has a very low rate of false positives (only 1 in 100), so if that test is positive, there must be a very high probability that there is, in fact, a bomb in that bag.” Sounds pretty reasonable, right?

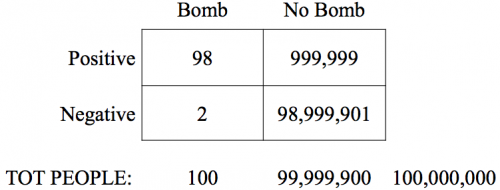

Well, it turns out you’re wrong. There is approximately a 0.01% chance that the bag actually contains a bomb. The reason you’re wrong is that it’s very hard to take into account the one-in-a-million base rate: how likely is it that anyone has a bomb, whether the test is positive or not? Here’s a diagram of what’s happening to help us think it through:

First, look at the bottom row. Let’s say there are 100,000,000 people and 100 of them actually have bombs in their bags (that works out to one in a million). Now, out of those 100 people with bombs, 98 get caught in this test but 2 of them get away (reflecting the 98% accuracy). We also said that 1% of the time the test comes up positive for people who don’t have bombs. So in that top right box, we have 999,999 people, which represents 1% of the 99,999,900 people who don’t have bombs.
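The four boxes in the diagram can be rebuilt from the three numbers in the scenario (base rate, hit rate, false-positive rate). This is a minimal sketch; it uses Python’s `fractions.Fraction` so the counts come out exact, and the function name `frequency_table` and its dictionary keys are illustrative names of my own.

```python
from fractions import Fraction


def frequency_table(population, base_rate, hit_rate, false_positive_rate):
    """Split a population into the four test-outcome groups (natural frequencies)."""
    with_bomb = population * base_rate
    without_bomb = population - with_bomb
    table = {
        "bomb, positive": with_bomb * hit_rate,                      # caught
        "bomb, negative": with_bomb * (1 - hit_rate),                # missed
        "no bomb, positive": without_bomb * false_positive_rate,     # false alarms
        "no bomb, negative": without_bomb * (1 - false_positive_rate),
    }
    # For these inputs every count is a whole number, so int() loses nothing.
    return {group: int(count) for group, count in table.items()}


table = frequency_table(
    population=100_000_000,
    base_rate=Fraction(1, 1_000_000),
    hit_rate=Fraction(98, 100),
    false_positive_rate=Fraction(1, 100),
)
for group, count in table.items():
    print(f"{group:>18}: {count:,}")
```

Running it reproduces the diagram: 98 bombs caught, 2 missed, 999,999 false alarms, and 98,999,901 innocent bags correctly cleared.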

Still with me? Now we use Bayes’ rule to estimate the probability that a positive test result actually means there’s a bomb. Remember, the hypothesis (H) is that there’s a bomb and the evidence (E) is the positive test.

Well, the likelihood, P(E|H), is the chance of a positive test when there’s a bomb. That’s equal to 0.98 (or 98%). The prior, or base rate, P(H), is the probability that there’s a bomb in any given bag (regardless of the results of the test). We said the chance of that is 1 in 1 million, which works out to 0.000001. Lastly, the marginal probability, P(E), is the probability that the test is positive (regardless of whether there’s a bomb or not). That’s equal to all the positive tests (98 + 999,999) divided by all the people (100,000,000), which works out to about 0.01.
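The same marginal probability can also be computed without counting people, by splitting P(E) over the two possible hypotheses, bomb (H) or no bomb (¬H). This step is usually called the law of total probability; here P(E|¬H) is the 1% false-positive rate and P(¬H) is everyone without a bomb:

$$
\begin{aligned}
P(E) &= P(E|H)\,P(H) + P(E|\neg H)\,P(\neg H) \\
     &= 0.98 \times 0.000001 + 0.01 \times 0.999999 \\
     &= 0.00000098 + 0.00999999 \\
     &= 0.01000097 \approx 0.01
\end{aligned}
$$

This matches the 1,000,097 positive tests out of 100,000,000 people in the diagram, and shows that rounding to 0.01 changes almost nothing.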

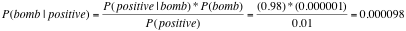

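When we plug those values into Bayes’ Theorem, we get:

$$P(H|E) = \frac{P(E|H)\,P(H)}{P(E)} = \frac{0.98 \times 0.000001}{0.01} = 0.000098 \approx 0.0001$$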

So there you have it: only about a one-hundredth of one percent chance that a positive test actually means there’s a bomb. This example shows that it’s really hard to mentally estimate the probability of an event, even when you have all of the information you would need to work it out with a calculator. We also tend to assume that P(bomb|positive) is equal to P(positive|bomb). As you can see from this demonstration, however, those probabilities are QUITE different once you take the base rate into account.
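To see just how far apart those two conditional probabilities are, here is a short sketch that computes both directly from the counts in the diagram. It is plain Python with exact fractions; the variable names are my own.

```python
from fractions import Fraction

# Counts from the 100,000,000-person example
caught = 98              # bomb in bag, test positive
missed = 2               # bomb in bag, test negative
false_alarms = 999_999   # no bomb, test positive

# P(positive | bomb): of the bags WITH a bomb, what fraction test positive?
p_pos_given_bomb = Fraction(caught, caught + missed)

# P(bomb | positive): of the bags that TEST positive, what fraction hold a bomb?
p_bomb_given_pos = Fraction(caught, caught + false_alarms)

print(f"P(positive|bomb) = {float(p_pos_given_bomb):.4f}")
print(f"P(bomb|positive) = {float(p_bomb_given_pos):.6f}")
print(f"ratio = {float(p_pos_given_bomb / p_bomb_given_pos):,.0f}")
```

Both numbers share the same numerator (the 98 bombs caught); the only difference is what they are divided by. Dividing by the 100 bags with bombs gives 0.98, while dividing by the 1,000,097 bags that tested positive gives about 0.000098, roughly ten thousand times smaller.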

Okay, so… what now? Does this just explain why so many innocent people are frisked at security checkpoints? Well, yes. But it also tells us about our tendency to inflate the likelihood of disastrous events. Take a look at that base rate: one in a million is pretty rare. But people are much less susceptible to this inflation when the context changes. For example, winning the lottery is just about as rare as being attacked by terrorists, but anyone on the street can tell you how unlikely it is that you’ll win the lottery this week.

One explanation for why this happens is that the salience of an event (i.e. how easily examples of it come to mind) significantly affects our judgment of base rate. For example, images of plane crashes come to mind easily because they are often shown on the news and we have strong emotional reactions to them. So you are likely to overestimate the base rate of fatal plane crashes. Heart disease, on the other hand, is much less salient as a cause of death, and so you are likely to underestimate the base rate of heart disease in the population. So Bayes’ rule actually helps us account for why people have irrational fears: if you have false beliefs about the prior probability of an event, then you’re going to estimate a false posterior probability.

In essence, it’s not that Bayes’ rule fails us in certain situations and prevents us from gaining a realistic picture of the world. Rather, it accounts for our mismatched predictions by showing that irrational beliefs lead to inaccurate estimates. Note, as well, that a lack of salience can also contribute to inaccurate estimates: severely underestimating a prior probability is just as problematic as overestimating it. For example, underestimating the prevalence of diabetes can lead us to predict an inaccurately small posterior probability of developing the disease ourselves.
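The point about false priors can be made concrete by reusing the airport test (98% hit rate, 1% false-positive rate) and changing only the prior. In the sketch below, the alternative priors are hypothetical values chosen to represent someone who underestimates or overestimates the one-in-a-million base rate; they are not estimates of any real risk, and the function name is my own.

```python
def posterior_from_rates(prior, hit_rate=0.98, false_positive_rate=0.01):
    """P(H|E) after a positive test, with P(E) from the law of total probability."""
    p_e = hit_rate * prior + false_positive_rate * (1 - prior)
    return hit_rate * prior / p_e


# Hypothetical believed priors: an underestimate (1 in 100 million),
# the post's base rate (1 in a million), and two inflated beliefs.
for prior in (1e-8, 1e-6, 1e-4, 1e-2):
    print(f"believed prior {prior:g}: "
          f"P(bomb|positive) = {posterior_from_rates(prior):.4%}")
```

The test itself never changes, yet the answer swings from a tiny fraction of a percent to about 1% (prior of 1 in 10,000) and then to roughly 50% (prior of 1 in 100). Whatever error goes into the prior comes straight out in the posterior.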

To bring it all full circle, consider that fear mongering increases the salience of feared events and causes us to overestimate their likelihood. When something we fear feels like it is likely to happen, we become more willing to do things to stop it (again, see: Patriot Act). Whether our government does this intentionally or not is not something for me to say here, but I think it is more than fair to say that the attacks of September 11, 2001 significantly increased the salience of terrorist attacks and that continuing fear probably contributes to a general overestimation of the likelihood of a future attack.

The deeper question is: how willing are we to live with laws that increase false positives (innocent people accused) in order to miss fewer of those who are dangerous?
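To put a rough number on that trade-off, here is a sketch that compares the airport test from earlier with a hypothetical stricter screening rule. The stricter rule’s rates (99% hit rate, 2% false-positive rate) are invented for illustration only; the population and base rate are the ones from the example.

```python
from fractions import Fraction

POPULATION = 100_000_000
BOMBS = POPULATION // 1_000_000   # one in a million, as in the example
INNOCENT = POPULATION - BOMBS


def outcomes(hit_rate, false_positive_rate):
    """Return (bombs missed, innocent bags flagged) for one screening setting."""
    missed = BOMBS * (1 - hit_rate)
    flagged = INNOCENT * false_positive_rate
    # Exact fractions: both counts are whole numbers for these rates.
    return int(missed), int(flagged)


current = outcomes(Fraction(98, 100), Fraction(1, 100))
# Hypothetical stricter rule: catches more bombs but flags more innocent bags.
stricter = outcomes(Fraction(99, 100), Fraction(2, 100))

extra_flagged = stricter[1] - current[1]
fewer_missed = current[0] - stricter[0]
print("current  (missed, flagged):", current)
print("stricter (missed, flagged):", stricter)
print(f"{extra_flagged:,} extra innocent bags flagged to miss {fewer_missed} fewer bomb(s)")
```

Under these made-up settings, catching one more bomb out of a hundred million passengers costs nearly a million additional innocent bags flagged. Different rates would change the exact figures, but the base rate makes the trade lopsided.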