You might have heard that Avatar is responsible for a whole new kind of movie technology and that thousands of theaters had to be built or upgraded just to show these movies. What exactly are these technological miracles and if they’re so great, why do we still have to wear those silly glasses?

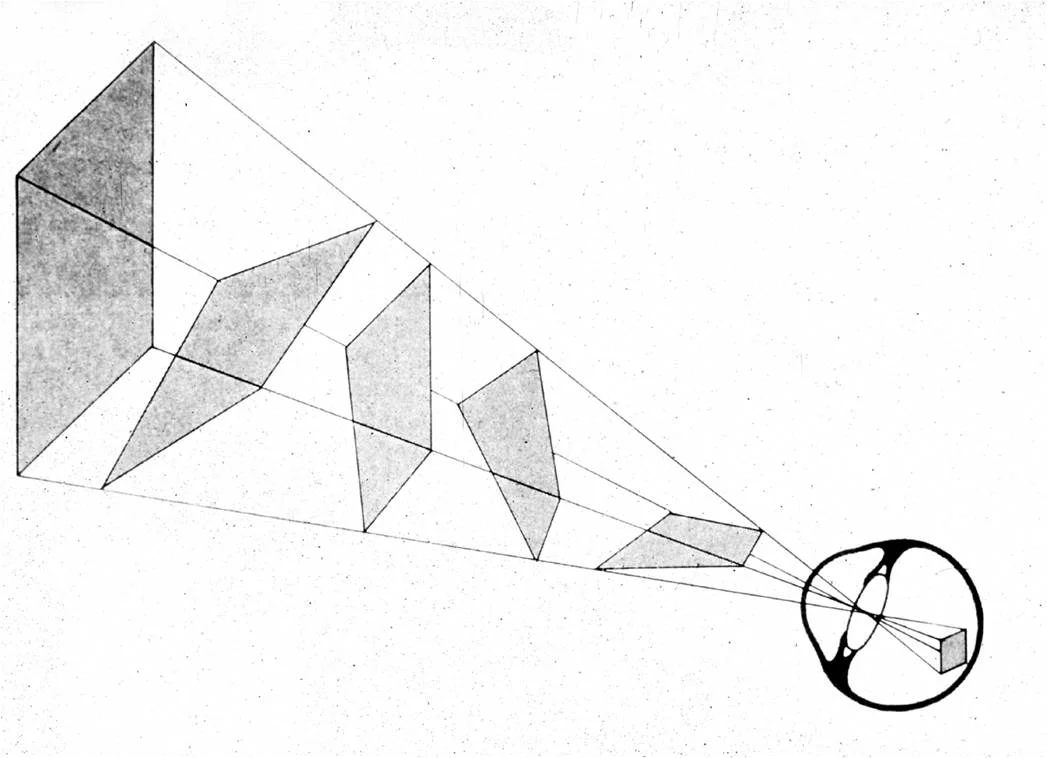

First of all, I want to point out that seeing in 3D is very hard. Sure, we do it all the time, but it’s actually quite a tricky process. Think of your eye as a regular camera. Light comes in through the pupil (the aperture), is focused by the lens, and then hits the back of the eye, which is called the retina. The retina, like the film or sensor at the back of a camera, is effectively a two-dimensional surface. That means that 3D objects in the world are represented in 2D on our retina. Our visual system, then, has to take this 2D signal and reconstruct the 3D object that caused it. This is a difficult problem because lots of different 3D objects can produce the exact same 2D projection:

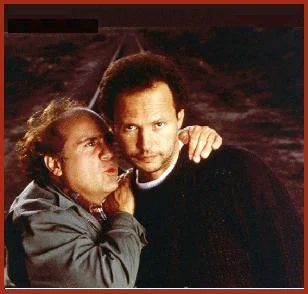
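If you like seeing this kind of thing in code, here is a minimal sketch of the problem, assuming an idealized pinhole camera with a flat image plane (the function name `project` and the sample points are made up for illustration). Two different points in the world, one near and one three times farther away, land on exactly the same spot in the image:

```python
def project(point, focal_length=1.0):
    """Project a 3D point (x, y, z) onto a flat image plane.

    Idealized pinhole model (an assumption, not a model of the real eye):
    the image position is the point's x and y scaled by focal_length / z.
    """
    x, y, z = point
    return (focal_length * x / z, focal_length * y / z)


# Two different 3D points: the second is three times farther away,
# and three times farther off to the side and up.
near_point = (1.0, 0.5, 2.0)
far_point = (3.0, 1.5, 6.0)

print(project(near_point))
print(project(far_point))
```

Both calls print the same 2D position, so from the image alone there is no way to tell which of the two points produced it.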

Despite the inherent difficulty, our visual system does a pretty good job. Even when we go to the movies and watch something on a 2D screen, we have a fairly good understanding of where things are in depth. Think about the last movie you saw. Were you ever confused about which object was supposed to be closer to you (or to the camera)? Or which objects were closer to each other? Below is a still of Billy Crystal and Danny DeVito from Throw Momma from the Train. Even without a $20 movie ticket and fancy glasses, you should be able to tell that Billy’s head is a little in front of Danny’s and that the two of them are in front of a set of railroad tracks that recede into the distance.

There is a distinct sense of depth in the image. Evolutionarily, this ability is extremely important – knowing how close something is to you or how far apart two things are in depth is critical for, say, swinging from branch to branch, reaching for fruit, or judging whether you can successfully jump over a ditch.

One of the things that helps our visual system understand where things are is how much the lens in our eye has to focus. Just like in a camera, the lens focuses light, and it changes shape to focus light differently depending on where the light is coming from. This is called accommodation, and it reflects the current focal position of the lens. Close one of your eyes and hold your finger out in front of you. If you focus on the finger, the things behind it will become fuzzy. Without putting your finger down, focus on the background. Now your finger should appear blurry and out of focus. This happens because muscles in your eye contract or relax, changing the shape of the lens. Knowing how hard these muscles are working tells your visual system how your lens is focused and whether the light being focused is coming from far away or from nearby. Unfortunately, even in 3D movies, you still look at and focus on a flat screen, so that information won’t help you see depth.

However, there is a whole slew of other information in the world that can help. These clues in the environment, or depth cues, tell us how far away things are from us (absolute depth) and how far apart they are from each other (relative depth). Here are some examples:

Occlusion: When part of an object is blocked by another object, this tells us that the blocked object is behind the one that is fully visible. DeVito’s right arm is in front of Billy’s sweater.

Shadow: Billy casts a shadow on DeVito, not the other way around, suggesting that Billy is in front of DeVito (based on where we infer the light source to be). In general, we expect light to come from above; this helps us understand whether objects are concave or convex. Makeup works the same way: if you want to emphasize your cheekbone, you would put a light color above it and a dark color below it to simulate light hitting it; this makes the bone look more prominent.

Perspective: The railroad tracks appear to get closer to each other higher up in the picture; however, we know that they are really parallel. The fact that they approach each other is a sign that they are receding into the distance. This is also called a pictorial cue.

Atmospheric Perspective: The top part of the picture is a little out of focus and foggy. That could be because of picture quality, but things that look hazy or out of focus are generally farther away.

Relative Height: Things higher up in pictures tend to be farther away. This is another pictorial cue.

Relative Size: There is no example of this in this picture. If there are two equally sized objects, say two quarters, then the one that is closer to us would appear larger than the one that is farther away.
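To put a rough number on the relative size cue, here is a small sketch that computes how large an object looks (its visual angle) at two distances. The function name, the approximate coin diameter, and the two viewing distances are my own choices for illustration, not measurements from the picture:

```python
import math


def visual_angle_deg(size, distance):
    """Angle (in degrees) that an object of a given size takes up in the
    visual field when viewed head-on from a given distance.
    Size and distance must be in the same units."""
    return math.degrees(2 * math.atan(size / (2 * distance)))


COIN_DIAMETER_CM = 2.4  # roughly a US quarter (approximate, for illustration)

near = visual_angle_deg(COIN_DIAMETER_CM, 50)   # coin held 50 cm away
far = visual_angle_deg(COIN_DIAMETER_CM, 100)   # same coin at 100 cm

print(f"near coin: {near:.2f} degrees, far coin: {far:.2f} degrees")
print(f"the near coin looks about {near / far:.2f} times as wide")
```

Doubling the distance roughly halves the apparent size, which is why two objects we know to be the same size tell us about their relative depth.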

There are also a few cues that we get from motion and moving objects (like parallax and dynamic occlusion), but I won’t go into them here.

All of these cues are available in regular photos, and the motion ones can be seen on TV or in movies, so what’s special about 3D film? You might have noticed that all of these things can be seen in the environment with just one eye – they are monocular cues. If you close one of your eyes and look at the picture, it should look exactly the same. Partly, this is because no matter where you look in the image, the focus of your lens (accommodation) doesn’t change. However, the same is true in the movie theater. The added sense of depth, both in 3D movies and in the real world, comes from the special property of seeing the same part of the world with two eyes.

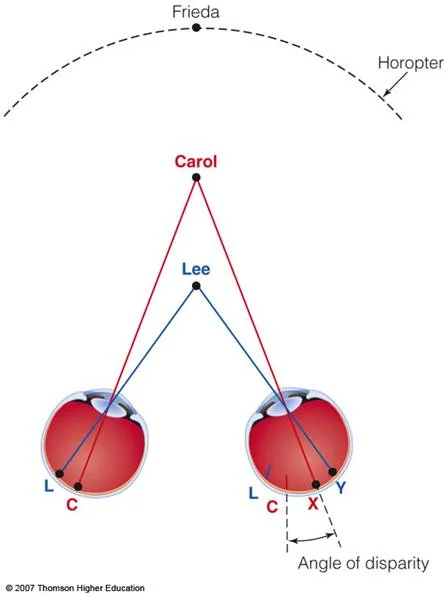

Take your finger and hold it up in front of you again. Alternate between closing your left eye and your right. You should see slightly different parts of your finger depending on which eye you close. The difference between what you see in your two eyes is called binocular disparity and the information about depth provided to your visual system by that difference is called stereopsis. Knowing only the distance between your eyes, the difference between what each eye sees, and the distance to where you are fixating (accommodation), the visual system can recover the depth of a scene. How much the two eyes angle toward each other is called convergence, and this is also a hint about where objects are located in the world.
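The geometry behind this can be sketched in a few lines. This is a simplified model, assuming two pinhole "eyes" that look straight ahead in parallel (real eyes converge), and the eye separation and focal length are rough, assumed values. Under those assumptions the disparity of a point is just the difference between where it lands in the left and right images, and depth can be recovered from it:

```python
EYE_SEPARATION_M = 0.063  # assumed typical adult eye separation, in metres
FOCAL_LENGTH_M = 0.017    # rough focal length of the eye, in metres (assumption)


def image_x(point_x, point_z, eye_x):
    """Horizontal image position of a point, seen by a pinhole eye at eye_x
    looking straight ahead along the z axis."""
    return FOCAL_LENGTH_M * (point_x - eye_x) / point_z


def disparity(point_x, point_z):
    """Difference between the left-eye and right-eye image positions."""
    left = image_x(point_x, point_z, -EYE_SEPARATION_M / 2)
    right = image_x(point_x, point_z, +EYE_SEPARATION_M / 2)
    return left - right


def depth_from_disparity(d):
    """Invert the relation disparity = focal_length * separation / depth."""
    return FOCAL_LENGTH_M * EYE_SEPARATION_M / d


finger = disparity(0.0, 0.4)  # a finger 40 cm away
wall = disparity(0.0, 4.0)    # a wall 4 m away

print(finger > wall)                 # nearer things have larger disparity
print(depth_from_disparity(finger))  # recovers roughly 0.4 (metres)
```

The point of the sketch is only that a larger difference between the two eyes' images means a nearer object, and that knowing the eye separation is enough to turn that difference back into a distance.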

Remember the “old” 3D movies with the red and green glasses? They would show the same picture twice on the screen, once in red and once in green, slightly shifted. Each lens of the glasses would filter out one of the images, so each eye would see only one color picture. This would trick your brain into thinking that each eye is seeing the same thing from slightly different positions and would result in a perception of depth. If you’ve heard of a stereoscope, this is exactly the same idea, except that in a stereoscope you would typically see stationary photos and here you could show a movie.

But these new 3D movies look even more 3D than those red and green ones! That’s because the red and green movies would use one camera to film the movie, then they would double each frame and project them over each other onto the same screen. That means that each eye would be getting exactly the same information! In reality, each eye sees slightly different things. The cool thing about the new movies (or at least Avatar) is that they are filmed with two cameras, about eye-distance apart so that the same scene actually looks a little different depending on which camera you look through.

In addition, they automated the cameras to angle in or out depending on how far away the focal point is. For example, if they are filming a closeup, the cameras would angle inward just like your eyes. Hold your finger out in front of you and slowly move it closer to your nose, focusing on it all the while. You should feel your eyes turning toward each other as it gets closer. The same thing happened with the cameras. The result is two shots of every scene, shifted a little bit depending on whether it is shot with the left or the right camera and adjusted depending on whether the objects are near or far. This gives an added sense of perspective to each shot.
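As a rough illustration of how much the cameras (or your eyes) have to turn, here is a sketch that assumes two cameras placed symmetrically around a subject that sits straight ahead. The function name and the 6.5 cm separation are hypothetical; I don't know the actual numbers used on any film rig:

```python
import math


def toe_in_angle_deg(camera_separation, subject_distance):
    """Angle (in degrees) each camera turns inward from straight ahead so
    that both point at a subject centered between them.
    Both arguments must be in the same units."""
    return math.degrees(math.atan((camera_separation / 2) / subject_distance))


SEPARATION_M = 0.065  # hypothetical, roughly eye-distance apart

for distance_m in (0.5, 2.0, 10.0):
    angle = toe_in_angle_deg(SEPARATION_M, distance_m)
    print(f"subject at {distance_m} m: each camera turns in {angle:.2f} degrees")
```

The angle is largest for closeups and shrinks quickly as the subject moves away, which matches the feeling of your eyes crossing only when your finger gets close to your nose.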

There are different ways of getting the two images to the two eyes. The red-green glasses allowed only one of the color images to get to one of the eyes, while the other eye saw the other color image. Alternatively, there are shutter glasses – the glasses are synchronized with the projector so that when the image for the left eye (shot with the left camera) is projected, the right-eye lens is “shuttered” or blocked off, and vice versa for the other eye. Since the frames are shown so quickly, your brain integrates the information and thinks that what your left and right eyes are seeing is there at the same time. The visual system then uses the differences between the two eye images, the perspective cues created by turning the cameras inward, and the rest of the monocular cues to give the sensation of depth. What seems to be used now are polarized glasses. There is a decent article on Wikipedia that talks about 3D polarized glasses (see, for example, http://en.wikipedia.org/wiki/Polarization_%28waves%29#3D_movies). A special lens needs to be installed in front of the projector to display the two eye images on the screen. Just like with shutter glasses, the two polarizations are often interleaved. A special screen is also needed.

This new technology is not entirely perfect. For one thing, the focal point does not change – the two images are still projected onto the same screen at the same distance from your eyes. This can cause headaches for some people, and it is why the result is still not quite as 3D as the real world. Also, stereo vision is only useful over a fairly short range, so elements of the scene that are more than about 20 feet away (most of the background) will appear as if on one depth plane, that is, very flat. There can, therefore, be a rather jarring jump in depth between the foreground and background. While I think this is a cool way of filming and showing movies, there is no way that this technology will ever come into our homes glasses-free.
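To see why stereo information fades with distance, here is a back-of-the-envelope sketch. It assumes a typical eye separation of about 6.3 cm and asks how different the two eyes' views are for an object versus something one foot behind it, at several distances. The function names and the distances are my own choices for illustration:

```python
import math

EYE_SEPARATION_FT = 6.3 / 30.48  # about 6.3 cm expressed in feet (assumed value)


def vergence_arcmin(distance_ft):
    """Angle between the two eyes' lines of sight when both point at an
    object straight ahead at the given distance, in arcminutes."""
    angle_rad = 2 * math.atan(EYE_SEPARATION_FT / (2 * distance_ft))
    return math.degrees(angle_rad) * 60


def disparity_arcmin(object_ft, gap_ft=1.0):
    """Angular disparity between an object and a point gap_ft behind it."""
    return vergence_arcmin(object_ft) - vergence_arcmin(object_ft + gap_ft)


for distance_ft in (2, 20, 200):
    d = disparity_arcmin(distance_ft)
    print(f"{distance_ft:>3} ft: a 1 ft depth gap gives {d:.3f} arcmin of disparity")
```

The same one-foot gap produces a large difference between the eyes up close and a tiny one far away, so distant parts of a scene carry very little stereo information.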

Incidentally, not everyone can see these movies in 3D. Some people are “stereo-blind.” This means that their brains use only the information from one eye. This might occur because their eyes are incapable of converging or focusing on the same point in space. There is an interesting article by Oliver Sacks in the New Yorker called Stereo Sue about just such a person who was stereoblind, but, with training, was able to learn to see in stereo.